Over the past decade, research in the NUAI lab has been paving a path to revolutionize the next generation of intelligent platforms. We strive to achieve this through an interdisciplinary research approach. The fundamental questions that we strive to answer include:

Our team has developed new brain-inspired algorithms, AI platforms, and energy-efficient machine learning substrates. Our research lab takes pride in fostering a highly stimulating, student-centric research environment.

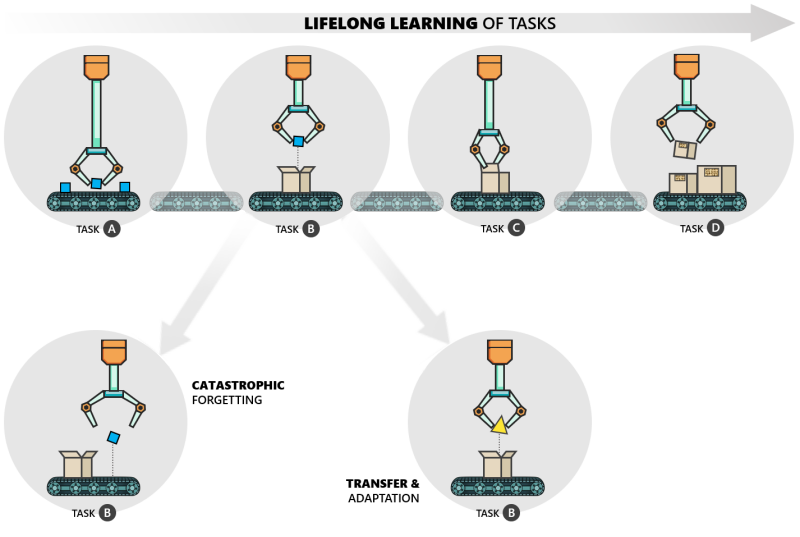

For machines to successfully operate in the real world and interact with their environment, they need to be able to learn throughout their deployment, acquire new skills without compromising old ones, adapt to changes, and apply previously learned knowledge to new tasks - all while conserving limited resources such as computing power, memory and energy. These capabilities are collectively known as Lifelong Learning. We take inspiration from biological processes and neural architectures and develop algorithms to overcome these challenges of lifelong learning.

The lab focuses on developing both algorithms and the supporting hardware architectures that facilitate lifelong learning. Algorithms incorporating Neurogenesis and Neuromodulation have been applied to deep learning and reinforcement learning models, whereas Metaplasticity has been applied to spiking neural network models.

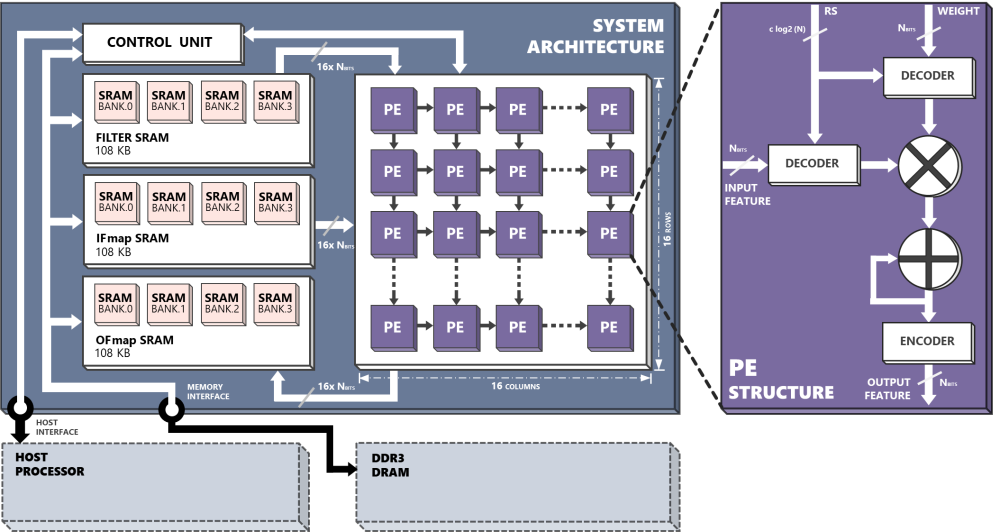

A vast array of devices, ranging from industrial robots to self-driving cars and smartphones, require increasingly sophisticated processing of real-world input data (image, voice, video, etc.). Dedicated hardware neural network accelerators are emerging again as attractive candidate architectures for such tasks, as energy-constrained environments are forcing researchers to turn away from traditional GPU and cloud-based systems and adopt custom circuit designs that can efficiently compute these DNNs at low power budgets.

The lab focuses on designing Digital and Mixed-Signal Accelerators for AI tasks, leading to device fabrication and evaluation. Furthermore, implementing custom architectures for neuromorphic algorithms yields further benefits with respect to compute and power consumption.

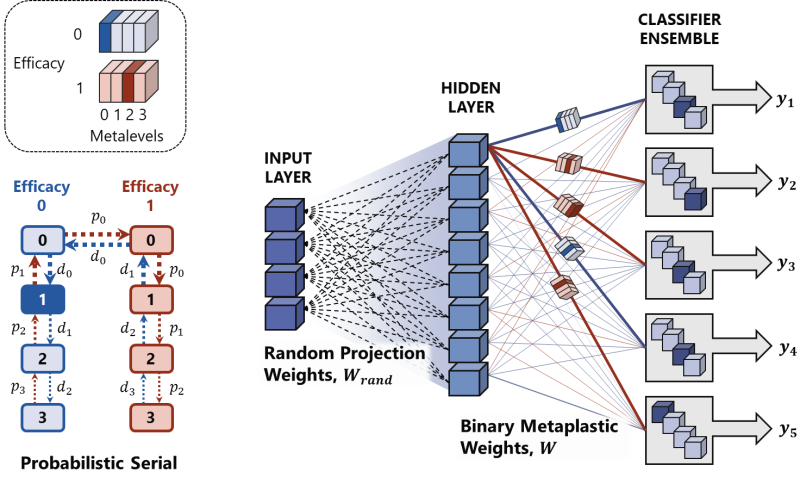

Spiking neural networks operate on biologically plausible neurons and use discrete, event-driven spikes to compute and transmit information. Though a substantial portion of cortical processing uses spikes, we currently lack an in-depth understanding of how to instantiate such capabilities in silico. Here, we investigate how populations of spiking neurons compute and communicate information, with different plasticity mechanisms such as short-term/long-term potentiation, neurogenesis, intrinsic plasticity, and attention. We posit that using these neural computing substrates yields robust information processing and energy efficiency for machine learning problems. Furthermore, we study how these networks can be realized efficiently on silicon substrates.
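To make the idea of event-driven spikes concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking neuron models. It is an illustration only, not the lab's model: the function name `simulate_lif` and all parameter values (time constant, threshold, reset) are assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns the list of time-step indices at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    v = v_rest
    spike_steps = []
    for step, i_in in enumerate(input_current):
        # Forward-Euler step of dv/dt = (-(v - v_rest) + i_in) / tau:
        # the membrane potential leaks toward rest and integrates the input.
        v += dt * (-(v - v_rest) + i_in) / tau
        if v >= v_thresh:
            # Threshold crossing: emit a discrete spike event, then reset.
            spike_steps.append(step)
            v = v_reset
    return spike_steps


if __name__ == "__main__":
    # A constant supra-threshold input produces a regular spike train;
    # a weak input never reaches threshold and produces no spikes.
    print(simulate_lif([2.0] * 30))
    print(simulate_lif([0.5] * 30))
```

The neuron communicates only through the times of its spikes, which is what makes event-driven hardware implementations attractive: no work is needed between events.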

The lab studies various approaches for targeting spiking network architectures, including Metaplasticity. Metaplasticity, the activity-dependent modification of synaptic plasticity, is an important technique for mitigating catastrophic forgetting in neural networks.
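As a toy illustration of why making plasticity depend on past activity can protect old knowledge, the sketch below shrinks a synapse's effective learning rate as that synapse accumulates changes. This is a hypothetical rule written for this example, not the lab's algorithm; the names `metaplastic_update`, `consolidation`, and `stiffness` are assumptions.

```python
def metaplastic_update(weight, consolidation, gradient,
                       base_lr=0.1, stiffness=1.0):
    """Apply one update to a single synapse (hypothetical toy rule).

    consolidation tracks how much this synapse has already changed;
    the more it has changed, the smaller its effective learning rate.
    Returns (new_weight, new_consolidation).
    """
    effective_lr = base_lr / (1.0 + stiffness * consolidation)
    delta = -effective_lr * gradient
    return weight + delta, consolidation + abs(delta)


if __name__ == "__main__":
    w, c = 0.0, 0.0
    for _ in range(5):
        w, c = metaplastic_update(w, c, gradient=1.0)
        # Each successive step moves the weight less than the previous one.
        print(round(w, 4), round(c, 4))
```

Under this rule, synapses that were heavily used for earlier tasks become harder to overwrite, while rarely used synapses stay plastic for new tasks.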

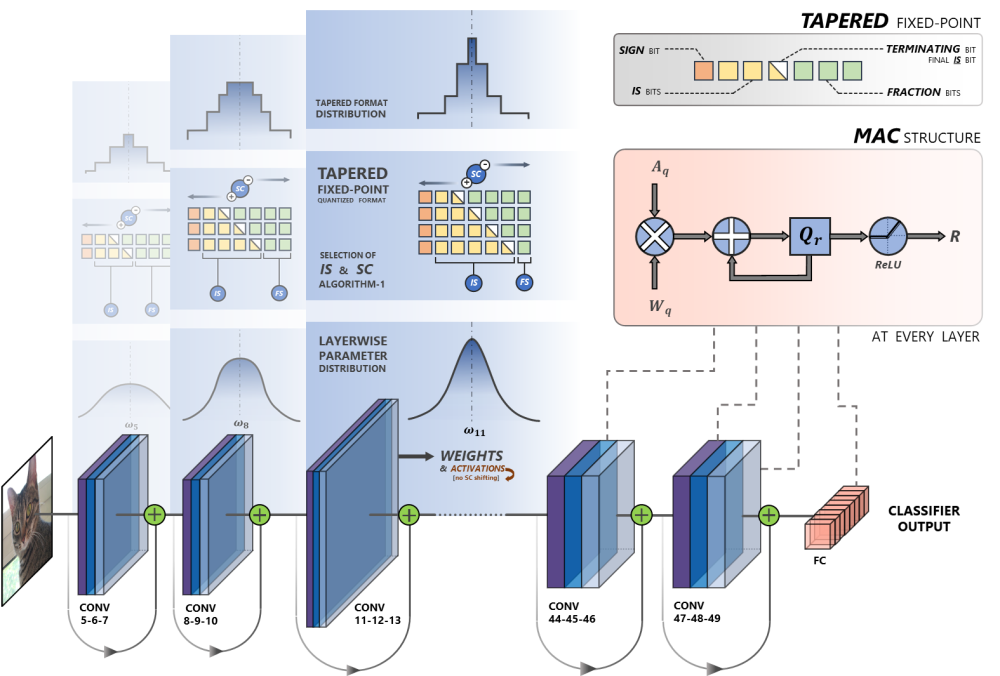

As AI models scale, the number of parameters that must be stored, processed, computed and communicated also scales exponentially. Thus, the lab focuses on Model Compression algorithms to make AI systems more efficient. Lab research focuses on compute optimization methods like Tensor Decomposition, which breaks down a tensor of model weights into multiple smaller components, reducing the number of FLOPs required for inference.
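The FLOP saving can be seen in the simplest case, a low-rank factorization of a 2-D weight matrix. The sketch below is an assumption-laden illustration rather than the lab's method: it builds a weight matrix `W` from two hypothetical factors `U` and `V`, shows that applying the factors in sequence gives the same output as applying `W`, and compares multiply-accumulate (MAC) counts. Higher-order tensor decompositions follow the same principle with more factors.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols]
            for row in a]


def dense_macs(rows, cols):
    """MACs for one matrix-vector product with a dense rows x cols matrix."""
    return rows * cols


def factored_macs(rows, cols, rank):
    """MACs when the matrix is stored as (rows x rank) times (rank x cols)."""
    return rank * (rows + cols)


# Hypothetical rank-2 factors of a 4 x 4 weight matrix.
U = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
V = [[1.0, 2.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
W = matmul(U, V)
x = [1.0, -1.0, 2.0, 0.5]

if __name__ == "__main__":
    print(matvec(W, x))             # dense layer output
    print(matvec(U, matvec(V, x)))  # same output via the two factors
    print(dense_macs(1024, 1024), factored_macs(1024, 1024, 64))
```

The saving only appears when the rank is small relative to the matrix dimensions; for a real model the factors would be fitted to approximate trained weights, which trades some accuracy for the reduced cost.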

The lab has also pioneered research on data compression that exploits variable, adaptive, or reduced bit-precision using an alternative numerical format, the posit. Low-precision (≤ 16-bit width) arithmetic is a practical approach to deploying deep learning networks onto edge devices, and the performance of low-precision deep learning models relies heavily on selecting the appropriate numerical format. Lab research evaluates the effectiveness of the posit numerical format over other numerical formats by developing hardware and software frameworks.
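For readers unfamiliar with posits, the following sketch decodes a posit bit pattern into a Python float. A posit consists of a sign bit, a variable-length regime (a run of identical bits), up to `es` exponent bits, and a fraction that takes whatever bits remain. The function name `decode_posit` and the default configuration (8 bits, `es=1`) are assumptions chosen for illustration; this is not the lab's framework.

```python
def decode_posit(bits, n=8, es=1):
    """Decode an n-bit posit with es exponent bits into a float.

    bits is the raw bit pattern as a non-negative integer.
    Defaults (n=8, es=1) are illustrative assumptions.
    """
    mask = (1 << n) - 1
    bits &= mask
    if bits == 0:
        return 0.0
    if bits == 1 << (n - 1):
        return float("nan")  # NaR ("not a real")
    sign = bits >> (n - 1)
    if sign:
        bits = (-bits) & mask  # negative posits are two's-complemented
    body = bits & ((1 << (n - 1)) - 1)  # the n-1 bits after the sign

    # Regime: count the run of identical leading bits.
    i = n - 2
    first = (body >> i) & 1
    run = 0
    while i >= 0 and ((body >> i) & 1) == first:
        run += 1
        i -= 1
    k = run - 1 if first else -run
    i -= 1  # skip the bit that terminates the regime, if present

    remaining = max(i + 1, 0)  # bits left for exponent and fraction
    tail = body & ((1 << remaining) - 1)
    exp_bits = min(es, remaining)
    # Exponent bits cut off by a long regime are treated as zeros.
    exponent = ((tail >> (remaining - exp_bits)) << (es - exp_bits)
                if exp_bits else 0)
    frac_bits = remaining - exp_bits
    frac = tail & ((1 << frac_bits) - 1)
    fraction = frac / (1 << frac_bits) if frac_bits else 0.0

    value = 2.0 ** (k * (1 << es) + exponent) * (1.0 + fraction)
    return -value if sign else value


if __name__ == "__main__":
    for pattern in (0x01, 0x20, 0x40, 0x48, 0x50, 0x7F, 0xC0):
        print(f"{pattern:08b} -> {decode_posit(pattern, n=8, es=1)}")
```

Because the regime length varies, posits spend more fraction bits on values near 1 and fewer on very large or very small values, which is the property that makes them interesting for low-precision deep learning.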

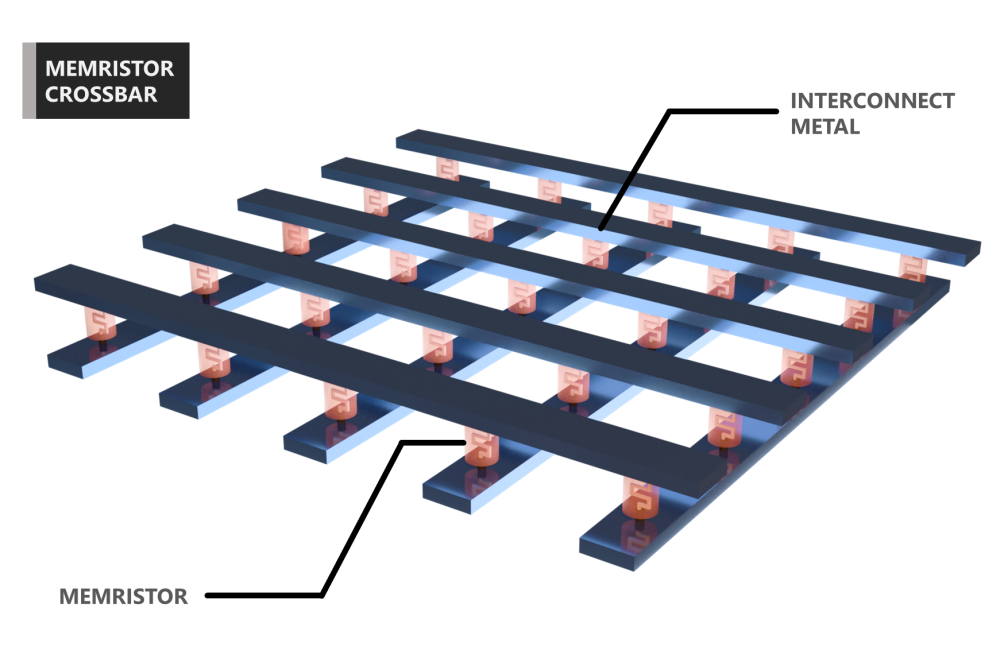

When designing neuromorphic systems, a significant challenge is how to build physical neuronal substrates with adaptive learning. Recently, non-volatile memory devices with history-dependent conductance modulation (memristors) have been demonstrated to be well suited to synaptic and neuronal operations. We propose hybrid CMOS/Memristor architectures, known as Neuromemristive systems, with on-device learning. We are interested in several aspects of this study:

What memristor features are suitable for spiking/non-spiking networks?

How can we design learning rules that exploit variability in the crossbars?

How can we train the memristors faster?
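To ground these questions, the sketch below models an idealized memristor crossbar. By Ohm's and Kirchhoff's laws, applying voltages to the rows yields column currents equal to a matrix-vector product with the conductance matrix, which is why crossbars map naturally onto synaptic weights. The helper names (`crossbar_currents`, `with_variability`) and the Gaussian device-variation model are assumptions for illustration; real devices also show nonlinearity, wire resistance, and drift that are ignored here.

```python
import random


def crossbar_currents(voltages, conductances):
    """Ideal crossbar read: column current I_j = sum_i V_i * G_ij.

    voltages: one value per row (volts).
    conductances: rows x columns matrix of conductances (siemens).
    """
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


def with_variability(conductances, rel_sigma, rng):
    """Return a copy with multiplicative Gaussian device variation.

    rel_sigma is the relative standard deviation (an assumed noise
    model); conductances are clipped at zero to stay physical.
    """
    return [[max(0.0, g * (1.0 + rng.gauss(0.0, rel_sigma))) for g in row]
            for row in conductances]


if __name__ == "__main__":
    G = [[1e-6, 2e-6], [2e-6, 4e-6]]
    V = [1.0, 0.5]
    print(crossbar_currents(V, G))
    noisy = with_variability(G, rel_sigma=0.1, rng=random.Random(0))
    print(crossbar_currents(V, noisy))
```

Comparing the ideal and perturbed outputs gives a quick feel for how much device variability a learning rule would need to tolerate or exploit.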

While the cloud has quickly become an indispensable technology, challenges around latency, cost and complexity have cleared the way for something else to drive connected technology innovation: Edge Computing.

Emerging advancements in embedded hardware and neuromorphic accelerators fuel the possibilities for artificial intelligence (AI) on the edge. Edge devices and gateways-to-edge devices are now more powerful and enable the local collection, storage and analysis of data without waiting for value to be derived from the cloud and then passed back to the device.

The NUAI lab members engage in innovative hardware and software design to handle evolving edge computing needs, while developing technologies for real-time medical sensor analytics, virtual unsupervised drone exploration, security camera video activity/anomaly detection, simulated robotics and more. This is critically important as edge AI is truly changing the game.